Self-supervising Fine-grained Region Similarities for Large-scale Image Localization

2. SenseTime Research 3. China University of Mining and Technology

Abstract

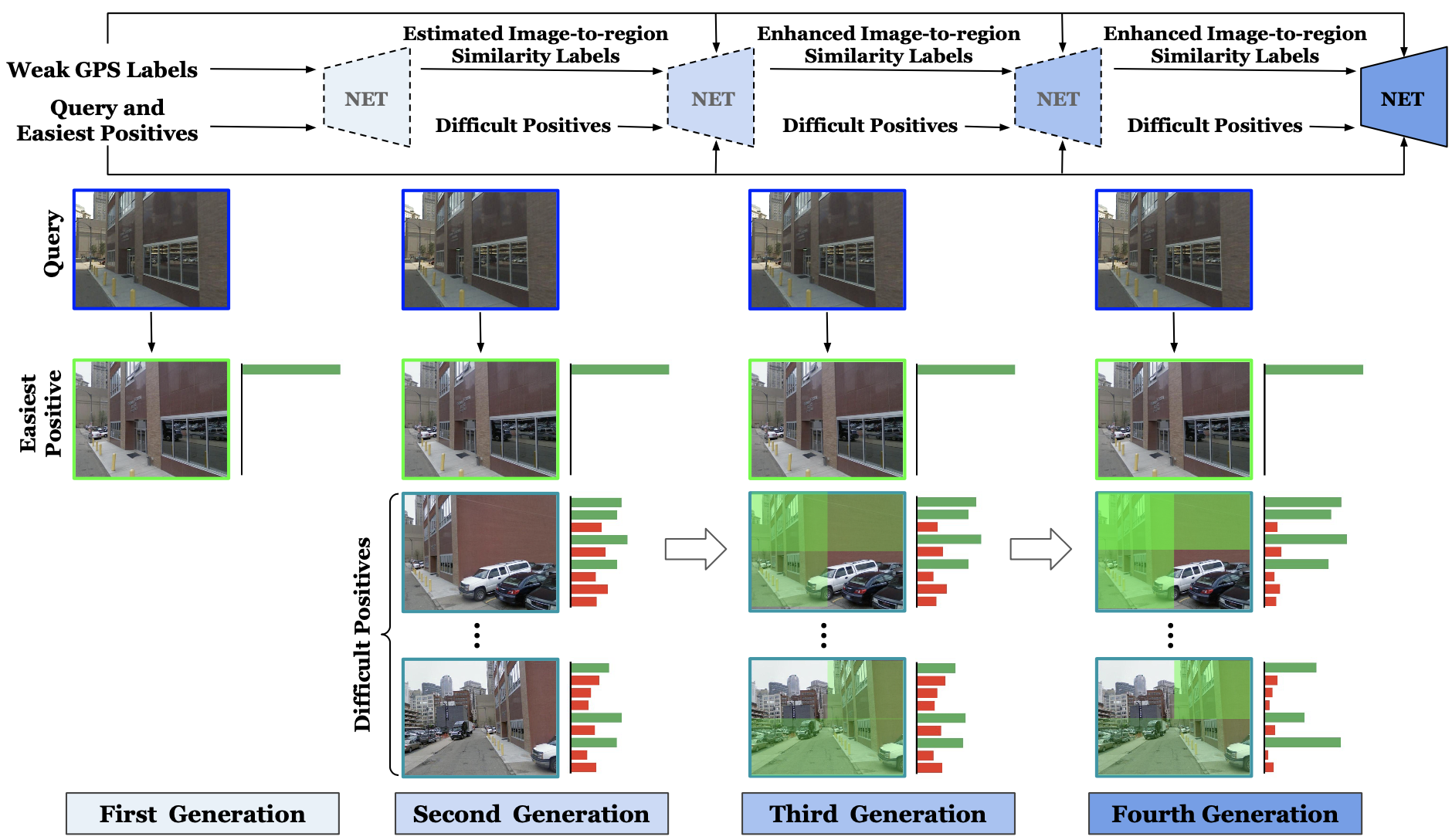

The task of large-scale retrieval-based image localization is to estimate the geographical location of a query image by recognizing its nearest reference images from a city-scale dataset. However, public benchmarks generally provide only noisy GPS labels for the training images, which act as weak supervision for learning image-to-image similarities. Such label noise prevents deep neural networks from learning discriminative features for accurate localization. To tackle this challenge, we propose to self-supervise image-to-region similarities in order to fully explore the potential of difficult positive images alongside their sub-regions. The estimated image-to-region similarities serve as extra training supervision for improving the network across successive generations, which in turn gradually refines the fine-grained similarities to achieve optimal performance. Our proposed self-enhanced image-to-region similarity labels effectively address the training bottleneck in state-of-the-art pipelines without any additional parameters or manual annotations in either training or inference. Our method outperforms state-of-the-art methods on the standard localization benchmarks by noticeable margins and shows excellent generalization capability on multiple image retrieval datasets.
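The two ideas in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`localize`, `soft_similarity_labels`), the cosine-similarity scoring, the softmax form of the labels, and the `temperature` value are all assumptions made for illustration. The first function ranks reference images by descriptor similarity and returns the GPS tags of the best matches; the second turns the similarities computed by a previous-generation network into soft labels over candidate images or regions.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products are cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def localize(query_desc, ref_descs, ref_gps, top_k=1):
    """Retrieval-based localization (illustrative helper, not from the paper).

    query_desc: (D,) descriptor of the query image.
    ref_descs:  (N, D) descriptors of the geo-tagged reference images.
    ref_gps:    (N, 2) GPS coordinates of the reference images.
    Returns the GPS tags and similarities of the top_k nearest references.
    """
    sims = l2_normalize(ref_descs) @ l2_normalize(query_desc)
    order = np.argsort(-sims)[:top_k]  # most similar first
    return ref_gps[order], sims[order]

def soft_similarity_labels(query_desc, candidate_descs, temperature=0.07):
    """Soft labels from a previous generation's descriptors (assumed form).

    candidate_descs: (M, D) descriptors of candidate positives or their
    sub-regions. The softmax and temperature are assumptions of this sketch.
    """
    sims = l2_normalize(candidate_descs) @ l2_normalize(query_desc)
    logits = sims / temperature
    logits = logits - logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()
```

Under these assumptions, a later generation would be trained against the soft labels rather than treating every GPS-nearby image as an equally valid positive.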

Spotlight Presentation

Materials

Citation

@inproceedings{ge2020self,

title={Self-supervising Fine-grained Region Similarities for Large-scale Image Localization},

author={Yixiao Ge and Haibo Wang and Feng Zhu and Rui Zhao and Hongsheng Li},

booktitle={European Conference on Computer Vision},

year={2020},

}